Network Latency: Definition, Causes and Best Practices

Keeping network latency to a minimum is a priority for any IT business that cares about its bottom line. Lag and slow loading times are deal-breakers for customers, so if you do not provide users with a low-latency experience, one of your competitors surely will.

This article is a guide to network latency, one of the leading causes of poor user experience (UX). Read on to learn why companies invest heavily in infrastructure to lower network latency and see what it takes to ensure optimal network response times.

What Is Latency in Networking?

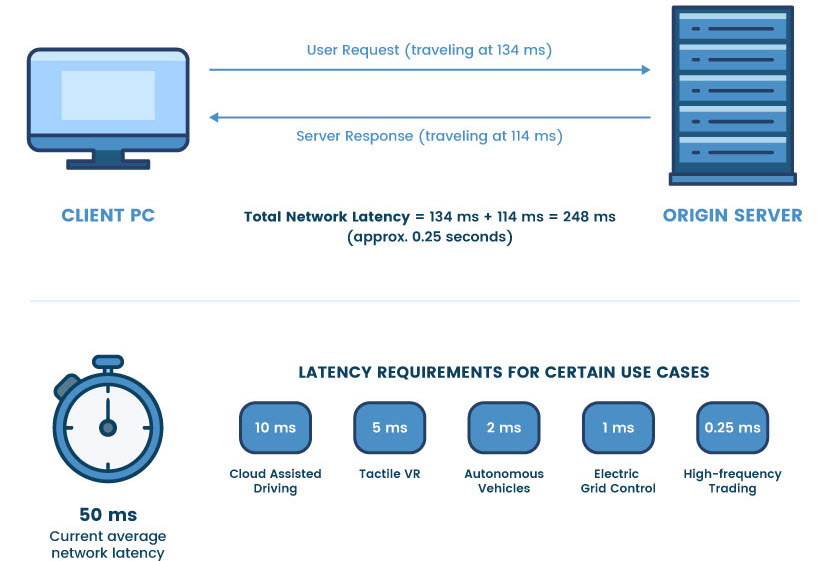

Network latency (or lag) measures the time it takes for a client device to send data to the origin server and receive a response. In other words, network latency is the delay between when the user takes action (like clicking on a link) and the moment a reply arrives from the server.

Even processes that seem instantaneous have some delay. Let's say a user is shopping on an ecommerce website. Clicking the Add to Cart button sets off the following chain of events:

- The web browser initiates a request to the website's servers (the clock for latency starts now).

- The data request moves through the Internet, hopping between switches and routers to reach the server.

- The request arrives at the site's web server.

- The server replies to the request with relevant info.

- The response travels back through the Internet, making similar "pit stops" along the way (the return path is not necessarily identical to the outbound one).

- The reply reaches the browser, which adds the item to the cart.
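
The chain of events above can be timed with a few lines of code. The following is a minimal sketch, not a production measurement tool: it assumes a throwaway echo server running on the same machine (the names `start_echo_server` and `measure_round_trip_ms` are made up for this example), so the numbers it prints reflect loopback latency rather than a real Internet path.

```python
import socket
import threading
import time


def start_echo_server() -> int:
    """Start a throwaway TCP echo server on localhost and return its port."""
    server = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    server.bind(("127.0.0.1", 0))  # port 0 lets the OS pick a free port
    server.listen(1)
    port = server.getsockname()[1]

    def serve_once() -> None:
        conn, _ = server.accept()
        with conn:
            data = conn.recv(1024)  # the request arrives at the server
            conn.sendall(data)      # the server replies
        server.close()

    threading.Thread(target=serve_once, daemon=True).start()
    return port


def measure_round_trip_ms(host: str, port: int, payload: bytes = b"add-to-cart") -> float:
    """Return the time between sending a request and receiving the reply."""
    with socket.create_connection((host, port), timeout=5) as client:
        start = time.perf_counter()   # the clock for latency starts now
        client.sendall(payload)
        reply = client.recv(1024)     # block until the reply reaches the client
        elapsed = time.perf_counter() - start
    if reply != payload:
        raise RuntimeError("unexpected reply from echo server")
    return elapsed * 1000.0


if __name__ == "__main__":
    port = start_echo_server()
    print(f"round trip: {measure_round_trip_ms('127.0.0.1', port):.3f} ms")
```

Pointing the same timing logic at a remote host would add the propagation, routing, and server processing delays that the list describes.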

Ensuring these types of communication occur as quickly as possible has become a vital business KPI. The closer the latency is to zero, the faster and more responsive your network becomes and the better the UX you provide. There are two general levels of network latency:

- Low network latency (desirable): Small delays lead to fast loading and response rates.

- High network latency (not desirable): High latency slows down performance and causes network bottlenecks.

Each use case has different latency standards. For example, an app for video calls requires low latency, whereas an email server can operate within a higher latency range. Common signs of high latency include:

- Websites loading slowly or not at all.

- Video and audio stream interruptions.

- Data transfers taking a long time (e.g., sending an email with a sizable attachment).

- Apps keep freezing.

- Accessing servers or web-based apps is slow.

Latency vs. Bandwidth vs. Throughput

Latency, bandwidth, and throughput are three closely related metrics that together determine the speed of a network:

- Network latency measures how long it takes for a computer to send a request and receive a response.

- Bandwidth is the maximum amount of data that can pass through the network per unit of time (typically expressed in bits per second).

- Throughput is the average amount of data that actually passes through the network over a specific period.

The most significant difference between the three is that latency is a time-based metric, whereas bandwidth and throughput measure the quantity of data moved over time.

While each concept stands on its own, you should not consider latency, bandwidth, and throughput in a vacuum. The three are closely related and affect each other. If a network were a water pipe, each concept would play a vital part in carrying water:

- Bandwidth would be the width of the pipe.

- Latency would be the time water takes to travel from one end of the pipe to the other.

- Throughput would be the amount of water that passes over a specified time.

Good network performance requires suitable bandwidth, good throughput, and low latency. You cannot have one (or two) of the three and expect a high network speed.
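
To see how the three metrics interact, consider a deliberately simplified model in which fetching a payload costs one round trip plus the time needed to push its bits through the link. The sketch below assumes that model (it ignores connection setup, TCP slow start, and packet loss), and the payload sizes and link figures are hypothetical.

```python
def transfer_time_s(size_bytes: int, rtt_ms: float, throughput_mbps: float) -> float:
    """Estimate fetch time as one round trip plus the time to transmit the bits."""
    if throughput_mbps <= 0:
        raise ValueError("throughput_mbps must be positive")
    size_bits = size_bytes * 8
    return rtt_ms / 1000.0 + size_bits / (throughput_mbps * 1_000_000)


if __name__ == "__main__":
    small_page = 50_000        # 50 kB, hypothetical
    large_file = 500_000_000   # 500 MB, hypothetical
    for label, size in (("50 kB page", small_page), ("500 MB file", large_file)):
        for rtt_ms, mbps in ((20, 100), (200, 100), (20, 1000)):
            seconds = transfer_time_s(size, rtt_ms, mbps)
            print(f"{label}: rtt={rtt_ms} ms, {mbps} Mbps -> {seconds:.3f} s")
```

Under this model, the small page is dominated by latency (raising the RTT from 20 ms to 200 ms matters far more than a tenfold throughput increase), while the large file is dominated by throughput.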

Some admins use latency and ping interchangeably, which is imprecise. Ping is a tool that sends ICMP echo requests to a host and reports the round-trip time of each reply. That figure is a useful baseline for network latency, but it does not include the server processing and application-level delays that users also experience.

Why Does Network Latency Matter?

Network latency directly impacts a business (and its bottom line) as it affects how users interact with the services provided. Customers have little to no patience for lag, as shown by the following numbers:

- One in four site visitors abandons a website that takes more than 4 seconds to load.

- Almost 46% of users do not revisit a poorly performing website.

- Over 64% of e-shoppers do not repeat purchases on a slow website.

- Every second in load-time delay reduces customer satisfaction by 16%.

- Every two-second delay in loading time results in almost 10% fewer users.

- Nearly 50% of users say they would uninstall an app if it "regularly ran slowly."

In addition to higher conversion and lower bounce rates, low-latency networks improve a company's agility and enable quick reactions to changing market conditions and demands.

Network latency matters more in some use cases than others. Ensuring top-tier network speed is not critical for music apps or casual surfing. Low latency is vital for use cases that involve high volumes of traffic or have a low tolerance for lag, such as:

- Interactive video conference and online meeting apps.

- Online gaming use cases (especially those running over the Internet without a dedicated server for gaming).

- Apps in financial markets with high-frequency trading.

- Live event streaming.

- Self-driving vehicles.

- Apps responsible for measuring pipe pressure in oil rigs.

- Hybrid cloud apps spread across on-site server rooms, cloud, and edge locations.

Planning to make changes to your network to improve latency? Perform a network security audit after the update to ensure new features and tweaks did not introduce exploits to the network.

How to Measure Network Latency?

Network admins measure latency by analyzing the back-and-forth communication between the client device and the data center hosting the server. There are two metrics for measuring network latency:

- Time to First Byte (TTFB): TTFB measures the time between the moment a client sends a request and the moment the first byte of the response reaches the client. TTFB is one of the primary server responsiveness metrics.

- Round Trip Time (RTT): RTT (also called Round Trip Delay (RTD)) is the time it takes for a data packet to travel from the user's browser to a network server and back again.

Admins typically measure TTFB and RTT in milliseconds (ms), but some ultra-low latency networks require analysis in nanoseconds (ns or nsec). Some of the most impactful factors for TTFB and RTT are:

- The distance between the client device and the web server.

- Transmission medium (copper cable, optical fiber, wireless, satellite, etc.).

- The number of network hops (the more intermediate routers or servers process a request, the more latency you should expect).

- Available bandwidth and the current traffic levels.
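
Both metrics can be approximated from code. The sketch below is one possible approach under stated assumptions: it uses Python's standard `http.client`, treats the TCP connect time as a rough stand-in for one round trip, and treats the moment `getresponse()` returns (status line and headers received) as the first byte. `HelloHandler` and `measure_ttfb_ms` are illustrative names, and the local server only stands in for a real origin.

```python
import http.client
import threading
import time
from http.server import BaseHTTPRequestHandler, HTTPServer


class HelloHandler(BaseHTTPRequestHandler):
    """Stand-in origin server that answers every GET with a tiny body."""

    def do_GET(self) -> None:
        body = b"hello"
        self.send_response(200)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args) -> None:
        pass  # keep the demo output quiet


def measure_ttfb_ms(host: str, port: int, path: str = "/") -> dict:
    """Time the TCP connect and the wait for the start of the response."""
    conn = http.client.HTTPConnection(host, port, timeout=5)
    try:
        t0 = time.perf_counter()
        conn.connect()                 # TCP handshake: roughly one round trip
        t1 = time.perf_counter()
        conn.request("GET", path)
        response = conn.getresponse()  # returns once status line and headers arrive
        t2 = time.perf_counter()
        response.read()                # drain the body before closing
        return {
            "connect_ms": (t1 - t0) * 1000.0,
            "ttfb_ms": (t2 - t0) * 1000.0,
            "status": response.status,
        }
    finally:
        conn.close()


if __name__ == "__main__":
    server = HTTPServer(("127.0.0.1", 0), HelloHandler)
    threading.Thread(target=server.serve_forever, daemon=True).start()
    print(measure_ttfb_ms("127.0.0.1", server.server_address[1]))
    server.shutdown()
    server.server_close()
```

Because the TTFB figure here includes connection setup and server processing, it is always at least as large as the connect time, which illustrates why TTFB says more about server responsiveness than RTT alone.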

There are three primary methods to measure network latency:

- Ping (tests the reachability of a host on an Internet Protocol network and reports the round-trip time of each echo request).

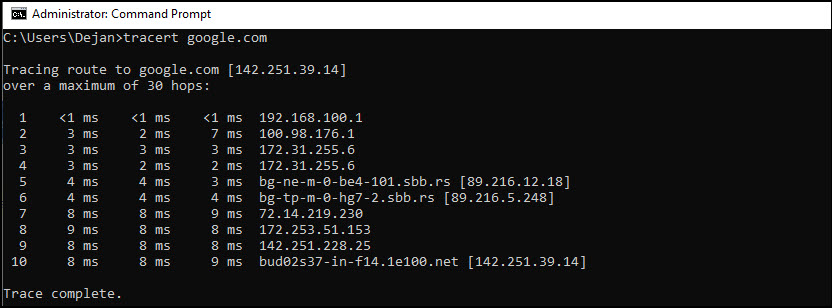

- Traceroute (tests reachability and tracks the path packets take to reach the host).

- MTR (My Traceroute is a more in-depth mix of Ping and Traceroute methods).

Traceroute is one of the easiest ways to measure latency. On Windows, open Command Prompt and type tracert followed by the destination address you want to test.

Once you type in the command, you'll see a list with all the routers in the network path leading to the destination server. You'll also get a time calculation in milliseconds for every network hop.
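
If you want to process that per-hop output rather than read it by eye, a small parser is enough. The sketch below assumes the hop-line layout that Windows tracert typically prints (hop number, three probe times, then the router address). The sample lines are made up for illustration, use reserved documentation addresses, and are not output from a real trace.

```python
import re
from typing import Optional

# A hop line looks roughly like: "  3    12 ms    11 ms    13 ms  192.0.2.1"
# A probe with no reply is shown as "*"; sub-millisecond replies as "<1 ms".
HOP_LINE = re.compile(r"^\s*(\d+)\s+(.*)$")
PROBE = re.compile(r"(<?\d+)\s*ms|\*")


def parse_hop(line: str) -> Optional[dict]:
    """Parse one hop line; return None for headers and other non-hop lines."""
    match = HOP_LINE.match(line)
    if match is None:
        return None
    probes = list(PROBE.finditer(match.group(2)))[:3]  # tracert sends three probes per hop
    if not probes:
        return None
    # "<1 ms" is treated as 1 ms, i.e. an upper bound.
    times = [float(p.group(1).lstrip("<")) for p in probes if p.group(1) is not None]
    return {
        "hop": int(match.group(1)),
        "times_ms": times,
        "avg_ms": sum(times) / len(times) if times else None,
        "lost": len(probes) - len(times),
    }


# Made-up sample output for illustration only.
SAMPLE = """\
  1    <1 ms    <1 ms    <1 ms  192.168.0.1
  2     9 ms    11 ms    10 ms  198.51.100.1
  3     *        *        *     Request timed out.
  4    24 ms    22 ms    23 ms  192.0.2.1
"""

if __name__ == "__main__":
    for raw in SAMPLE.splitlines():
        hop = parse_hop(raw)
        if hop is not None:
            print(hop)
```

A sudden jump in the average time between two consecutive hops is usually the first place to look when hunting for the source of lag.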

Causes of Network Latency

Here are the most common causes of network latency:

- Distance: The distance between the device sending the request and the server is the prime cause of network latency.

- Heavy traffic: Too much traffic consumes bandwidth, which causes congestion and queuing delays that add latency.

- Packet size: Large network payloads (such as those carrying video data) take longer to send than small ones.

- Packet loss and jitter: A high percentage of packets failing to reach the destination or too much variation in packet travel time also increases network latency.

- User-related problems: A weak network signal and other client-related issues (such as being low on memory or having slow CPU cycles) are common culprits behind latency.

- Too many network hops: High numbers of network hops cause latency, such as when data must go through various ISPs, firewalls, routers, switches, load balancers, intrusion detection systems, etc.

- Gateway edits: Network latency grows if too many gateway nodes edit the packet (such as changing hop counts in the TTL field).

- Hardware issues: Outdated equipment (especially routers) is a common cause of network latency.

- DNS errors: A faulty or slow Domain Name System server delays name resolution, which slows down every new connection and can lead to failed lookups or requests sent to the wrong address.

- Internet connection type: Different transmission mediums have different latency characteristics. DSL, cable, and fiber tend to have low lag (in the 10-42ms range), while satellite has higher latency.

- Malware: Malware infections and similar cyber attacks also slow down networks.

- Poorly designed websites: A page that carries heavy content (like too many HD images), loads files from a third party, or relies on an over-utilized backend database performs more slowly than a well-optimized website.

- Poor web-hosting service: Shared hosting often causes latency, while dedicated servers are typically less prone to lag.

- Acts of God: Heavy rain, hurricanes, and stormy weather disturb signals and lead to lag.
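
Two of the causes above, distance and jitter, are easy to quantify. The sketch below rests on stated assumptions: signals in optical fiber travel at roughly 200,000 km/s (about two thirds of the speed of light in a vacuum), and jitter is taken as the mean absolute difference between consecutive RTT samples, which is only one of several definitions in use. The function names and sample values are illustrative.

```python
from statistics import mean
from typing import Sequence

# Assumption: roughly two thirds of the speed of light in a vacuum.
SPEED_IN_FIBER_KM_PER_S = 200_000


def min_rtt_ms(distance_km: float) -> float:
    """Lower bound on round-trip time imposed by distance alone."""
    one_way_s = distance_km / SPEED_IN_FIBER_KM_PER_S
    return 2 * one_way_s * 1000.0


def mean_jitter_ms(rtt_samples_ms: Sequence[float]) -> float:
    """Average absolute difference between consecutive RTT samples."""
    if len(rtt_samples_ms) < 2:
        return 0.0
    diffs = [abs(b - a) for a, b in zip(rtt_samples_ms, rtt_samples_ms[1:])]
    return mean(diffs)


if __name__ == "__main__":
    for km in (100, 1_000, 6_000):
        print(f"{km} km of fiber: at least {min_rtt_ms(km):.1f} ms round trip")
    samples = [20.0, 22.0, 21.0, 30.0]  # hypothetical RTT samples in ms
    print(f"jitter: {mean_jitter_ms(samples):.1f} ms")
```

No amount of tuning gets below the distance-imposed floor, which is why moving servers closer to users is such a common remedy.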

One way to address your app's latency-related issues is to deploy an edge server. phoenix NAP's edge computing solutions enable you to deploy hardware closer to the source of data (or users) and cut down your RTTs.

How to Reduce Network Latency?

Here are the most effective methods for lowering network latency:

- Use traffic shaping, Quality of Service (QoS), and bandwidth allocation to prioritize traffic and improve the performance of mission-critical network segments.

- Use load balancing to avoid congestion and offload traffic to network parts with the capacity to handle the additional activity.

- Ensure network users and apps are not unnecessarily using up bandwidth and placing pressure on the network.

- Use edge servers to reduce the quantity of data that needs to travel from the network's edge to the origin server. That way, most real-time data processing occurs near the source of data without sending it all to the central server.

- Use a CDN to cache content in multiple locations worldwide and quickly serve it to users. A CDN stores data closer to end-users, so most requests do not have to travel to the origin server.

- Reduce the size of content to improve website and app performance. Common methods include minimizing the number of render-blocking resources, lowering the size of images, and using code minification to reduce the size of JavaScript and CSS files.

- Optimize the Domain Name System server to prevent network slowdowns.

- Consider subnetting (grouping endpoints that communicate frequently) to reduce latency across the network.

- Set up a page to strategically load certain assets first to improve perceived page performance. A common technique is to first load the above-the-fold area of a page. That way, the end-user starts interacting with the page before the rest of it finishes loading.

- Ensure your backend database does not slow down the website with large tables, complex calculations, long fields, and improper use of indexes.

- Use HTTP/2 to cut down latency by multiplexing multiple requests over a single connection, which lets page elements load in parallel.

- Rely on network tools to track latency and resolve lag-related issues (packet sniffing, NetFlow analysis, SNMP monitoring, CBQoS monitoring, etc.).

- Keep all software up to date with the latest patches (do not forget to keep an eye on router and modem updates).
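
To illustrate why caching near the user helps, here is a toy model of the CDN and edge-server items from the list above. It is a sketch under loud assumptions: `EdgeCache` and `slow_origin` are hypothetical names, the 50 ms origin delay is simulated with a sleep, and a real CDN handles invalidation, cache headers, and much more.

```python
import time
from typing import Callable, Dict, Tuple


class EdgeCache:
    """Tiny TTL cache standing in for a CDN edge node (illustrative only)."""

    def __init__(
        self,
        fetch_from_origin: Callable[[str], bytes],
        ttl_s: float = 60.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._fetch = fetch_from_origin
        self._ttl_s = ttl_s
        self._clock = clock
        self._store: Dict[str, Tuple[float, bytes]] = {}
        self.hits = 0
        self.misses = 0

    def get(self, path: str) -> bytes:
        now = self._clock()
        cached = self._store.get(path)
        if cached is not None and now - cached[0] < self._ttl_s:
            self.hits += 1
            return cached[1]      # served from the edge, no trip to the origin
        self.misses += 1
        body = self._fetch(path)  # slow path: full round trip to the origin
        self._store[path] = (now, body)
        return body


def slow_origin(path: str) -> bytes:
    """Hypothetical origin server that is 50 ms away."""
    time.sleep(0.05)
    return f"content of {path}".encode()


if __name__ == "__main__":
    cache = EdgeCache(slow_origin, ttl_s=60.0)
    for attempt in range(3):
        start = time.perf_counter()
        cache.get("/logo.png")
        elapsed_ms = (time.perf_counter() - start) * 1000
        print(f"request {attempt + 1}: {elapsed_ms:.1f} ms")
    print(f"hits={cache.hits} misses={cache.misses}")
```

Only the first request pays the origin round trip; repeat requests within the TTL are answered locally, which is the effect a CDN aims for at a much larger scale.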

Are your cloud resources causing too much latency? While edge computing may help, sometimes the best solution is to turn to cloud repatriation and bring the workloads from public clouds to bare metal servers.

Keeping Network Latency in Check is a Must for Your UX

You cannot eliminate network latency, but you should make strides to minimize lag and keep it under control. Fast and responsive connections directly impact lead generation and user retention rates, making investments in lowering latency a no-brainer decision for anyone looking to maximize profit.