Edge Computing Challenges and How to Solve Them

As smart cars, smart home devices, and connected industrial equipment grow both in number and popularity, they generate data practically everywhere. In fact, there are more than 16.4 billion connected IoT (Internet of Things) devices worldwide in 2022, and the number is expected to skyrocket to 30.9 billion by 2025. By then, IDC predicts that these devices will generate 73.1 zettabytes of data globally, a 300% increase compared to the not-so-distant 2019.

Sorting and analyzing this data quickly and effectively is key to both optimal application user experience and better business decision-making. Edge computing is the technology making this happen.

However, deploying modern workloads such as microservices, machine learning apps, and AI close to the edge brings a number of challenges an organization’s infrastructure needs to address. To benefit from edge computing, businesses need to find a perfect balance between their IT infrastructure and their end-user needs.

Learn more about the differences between edge and cloud computing architecture.

Challenges of Edge Computing

To perform optimally, edge workloads require the following:

- Proximity – Storage and compute resources need to be close to the data source.

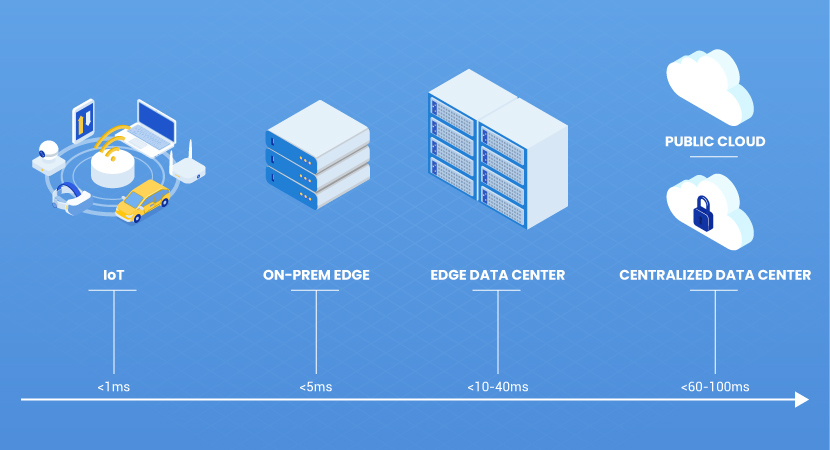

- Responsiveness – Apps need data transfer latencies ranging from under 5 to 20 milliseconds.

- Mobility – Many edge devices move, and compute and storage resources need to follow them.

The data transfer latency of the remote, centralized public cloud computing model does not cater to the needs of modern edge workloads. On the other hand, building a decentralized network to support edge workloads comes with its own intimidating list of challenges.
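
To see why proximity matters for the latency range above, a rough back-of-the-envelope sketch can help. It assumes signals travel through optical fiber at roughly 200,000 km/s and treats the 5 to 20 millisecond figures as round-trip budgets; both are assumptions for illustration, and the result ignores routing, queuing, and processing time, so real-world latency will be higher.

```python
# Assumption: light travels through optical fiber at roughly 200,000 km/s
# (about two thirds of its speed in a vacuum).
FIBER_SPEED_KM_PER_S = 200_000


def round_trip_ms(distance_km: float) -> float:
    """Best-case round-trip propagation delay over fiber, in milliseconds."""
    return 2 * distance_km / FIBER_SPEED_KM_PER_S * 1000


def max_distance_km(rtt_budget_ms: float) -> float:
    """Farthest a server can be while staying within a round-trip budget."""
    return rtt_budget_ms / 1000 * FIBER_SPEED_KM_PER_S / 2


if __name__ == "__main__":
    for km in (50, 500, 2000):
        print(f"{km:>5} km -> {round_trip_ms(km):.1f} ms round trip")
    print(f"5 ms budget -> at most {max_distance_km(5):.0f} km of fiber")
```

Under these assumptions, propagation delay alone uses up a 5 millisecond budget at around 500 km of fiber, before any processing happens.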

Logistics Complexities

Managing disparate edge compute, network, and storage systems is complex and requires having experienced IT staff available at multiple geographical locations at the same time. This takes time and puts a significant financial strain on organizations, especially when running hundreds of container clusters, with different microservices served from different edge locations at different times.

Bandwidth Bottlenecks

According to a report by Morgan Stanley, radars, sensors, and cameras of autonomous vehicles alone are expected to generate up to 40 TB of data an hour. To make life-saving decisions fast, the data these four-wheeled supercomputers create needs to be transferred and analyzed within fractions of a second.
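
To put the 40 TB figure in perspective, the sketch below converts it into the sustained throughput needed to move the data as fast as it is produced. It assumes decimal terabytes (10^12 bytes), and the link speeds in the usage example are hypothetical values chosen for illustration.

```python
TB = 10**12  # bytes in a decimal terabyte (assumption)


def sustained_gbps(terabytes_per_hour: float) -> float:
    """Average throughput (Gbps) needed to keep up with the data rate."""
    bits_per_hour = terabytes_per_hour * TB * 8
    return bits_per_hour / 3600 / 10**9


def upload_hours(terabytes: float, link_gbps: float) -> float:
    """Hours needed to push a data volume over a link of the given speed."""
    seconds = terabytes * TB * 8 / (link_gbps * 10**9)
    return seconds / 3600


if __name__ == "__main__":
    print(f"40 TB/hour needs about {sustained_gbps(40):.1f} Gbps sustained")
    for link in (1, 10):  # hypothetical uplink speeds in Gbps
        print(f"{link:>2} Gbps link: {upload_hours(40, link):.1f} hours per 40 TB")
```

At roughly 89 Gbps sustained, streaming everything to a remote data center is impractical, which is why the analysis has to happen close to the vehicle.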

Similarly, numerous edge devices collect and process data simultaneously. Sending such raw data to the cloud can compromise security and is often inefficient and cost-prohibitive.

To optimize bandwidth costs, organizations typically allocate higher bandwidth to data centers and lower bandwidth to the endpoints. This makes uplink speed a bottleneck: applications push data from the cloud to the edge while edge data simultaneously travels the other way. As the edge infrastructure grows, IoT traffic increases, and insufficient bandwidth causes massive latency.
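
One common way to ease the uplink bottleneck is to summarize data at the edge and upload only the summary plus any values that look unusual. The following is a minimal sketch of that idea, not a production pipeline; the function name `summarize`, the sample readings, and the threshold are all hypothetical.

```python
from statistics import mean


def summarize(readings: list[float], threshold: float) -> dict:
    """Reduce a window of raw readings to a compact summary for upload."""
    if not readings:
        raise ValueError("readings must not be empty")
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": round(mean(readings), 2),
        # Keep only the raw values that exceed the alert threshold.
        "anomalies": [r for r in readings if r > threshold],
    }


if __name__ == "__main__":
    # Hypothetical temperature readings collected by one edge device.
    window = [20.1, 20.3, 19.9, 35.7, 20.0, 20.2]
    print(summarize(window, threshold=30.0))
```

The edge node sends one small record per window instead of every raw reading, while the values that matter most still reach the cloud unchanged.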

Limited Capability and Scaling Complexities

The smaller form factor of edge devices typically leads to a lack of power and compute resources necessary for advanced analytics or data-intensive workloads.

Also, the remote and heterogeneous nature of edge computing makes physical infrastructure scaling a major challenge. Scaling at the edge does not just mean adding more hardware at the source of the data. Rather, it extends to scaling staff, data management, security, licensing, and monitoring resources. If not planned and executed correctly, such horizontal scaling can lead to increased costs due to overprovisioning, or suboptimal application performance due to insufficient resources.
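
The trade-off between overprovisioning and insufficient resources can be illustrated with a simple sizing sketch. The load figures, per-node capacity, and 20% headroom below are hypothetical, and real capacity planning would also account for the staffing, licensing, and monitoring costs mentioned above.

```python
import math


def nodes_needed(peak_load: float, node_capacity: float, headroom: float = 0.2) -> int:
    """Smallest node count that serves peak_load with spare headroom."""
    if node_capacity <= 0:
        raise ValueError("node_capacity must be positive")
    return math.ceil(peak_load * (1 + headroom) / node_capacity)


def utilization(load: float, nodes: int, node_capacity: float) -> float:
    """Fraction of total capacity in use at a given load."""
    return load / (nodes * node_capacity)


if __name__ == "__main__":
    # Hypothetical edge site: 900 requests/s at peak, 300 requests/s off-peak,
    # with each node handling up to 250 requests/s.
    nodes = nodes_needed(peak_load=900, node_capacity=250)
    print(f"nodes: {nodes}")
    print(f"peak utilization: {utilization(900, nodes, 250):.0%}")
    print(f"off-peak utilization: {utilization(300, nodes, 250):.0%}")
```

Sizing for the peak leaves most capacity idle off-peak, while sizing for the average leaves the site short when demand spikes.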

Data Security

As computing moves to the edge, infrastructure goes beyond the multiple physical and virtual layers of network security offered by centralized computing models. If not adequately protected, the edge becomes a target for various cyber threats.

Malicious actors can inject unauthorized code or even replicate entire nodes, stealing data and tampering with it while flying under the radar. They can also interfere with data transferred across the network via routing information attacks, which affect throughput, latency, and data paths by deleting and replacing data. Another common data security threat at the edge is DDoS attacks aimed at overwhelming nodes to cause battery drain or exhaust communication, computation, and storage resources.

Out of all the data IoT devices collect, only the most critical data needs to be analyzed. Without secure and compliant long-term data retention and archival solutions, the rest often accumulates and sprawls at the edge, further increasing vulnerability.

Data Access Control

The fact that edge devices are physically isolated means that, in this distributed computing system, data is handled by different devices, which increases security risks and makes data access difficult to monitor, authenticate and authorize.

Privacy of an end-user or end device is another critical aspect to maintain. Multi-level policies are required to ensure every end-user is accounted for. Making this possible while meeting real-time data latency requirements is a major challenge, especially when building edge infrastructure from scratch.

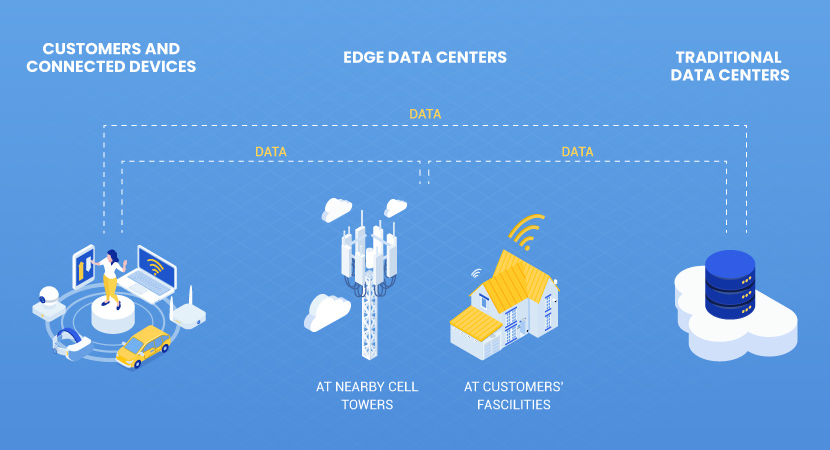

Having a physically close, pre-configured solution built by an ecosystem of partners offering optimized hardware and infrastructure management tools mitigates edge computing challenges. Edge data centers are an emerging solution that addresses this by offering organizations high-performance data storage and processing resources on a secure and fast network.

Edge Infrastructure to the Rescue

In comparison to the cloud, edge infrastructure is located in smaller facilities close to the end-user network. By bringing compute, storage, and network resources closer to the source of the data, edge data centers offer various benefits to both organizations and their workloads.

These include:

- Minimized latency due to physical proximity – Improving data delivery and reducing application response time.

- Enhanced security and privacy – High levels of physical and cyber security on-prem, less data uploaded to the cloud, and reduced amount of vulnerable data in transit.

- Increased reliability – Redistributing workloads to smaller data centers takes the load off central servers, increasing performance and data availability.

- Cost optimization – With managed services and pre-configured infrastructure solutions, edge data centers help organizations reduce TCO and cut IT costs.

As a global IaaS provider, phoenixNAP recognizes the importance of bringing powerful compute and storage resources close to the edge and making them easily accessible and scalable around the globe. To further expand the availability of our Bare Metal Cloud platform, we partnered with American Tower to launch our first edge location in Austin, Texas. This provides users in the US Southwest with access to the following features:

- Pre-configured, API-driven dedicated servers deployed in minutes, accessible in 10 milliseconds.

- Access to 5G, supporting future-ready edge workloads.

- Automated infrastructure management through certified IaC tools (Terraform, Ansible, Pulumi).

- Multiple virtual Layer 3 private connections with Megaport Cloud Router (MCR).

- 15 TB free bandwidth on a 20 Gbps network with free DDoS protection.

- High-speed NVMe storage and easy access to petabytes of S3-compatible cloud object storage.

- Flexible billing and bandwidth options with discounts for monthly or yearly reservations.

Through high-performance hardware and software technologies offered as a service, platforms like Bare Metal Cloud help businesses improve time to action and avoid data transfer bottlenecks. Edge workloads benefit from automation-driven infrastructure provisioning and support for containerized applications and microservices. At the same time, secure, single-tenant resources and single-pane-of-glass monitoring and access allow for full infrastructure control. Finally, leveraging pre-configured servers eliminates the need to manage racks, power, cooling, security, and other infrastructure upkeep, letting in-house teams focus on application optimization instead.

Stay competitive and ensure real-time responsiveness for your edge applications.

Deploy your Bare Metal Cloud Edge Server in Austin, Texas today!

Conclusion

Wireless communication technologies, IoT devices, and edge computing keep evolving, beckoning one another into new breakthroughs while creating new challenges to tackle. As more data gets generated and distributed throughout the IoT ecosystem, compute, storage, and networking technologies need to follow and adapt to the needs of the workloads of the future. Through partnerships between different IT vendors and service providers, solutions such as edge data centers emerge. By bringing secure, high-performance data transfer, storage, and analytics right to the birthplace of data, they help organizations overcome their edge computing struggles.