Top 8 DevOps Trends for 2021

DevOps is a leading model for efficient software delivery, and the field shows no signs of stagnation. The DevOps community is always searching for ways to speed up development and raise productivity, so shifts in mindsets and processes are a natural part of DevOps-centric software development.

This article examines DevOps trends for 2021.

Read on to learn what to expect from DevOps in the next year and see what your team requires to stay competitive.

Trends in DevOps to Keep an Eye On

1. Maturation of Infrastructure Automation (IA) Tools

Infrastructure automation tools enable teams to design and automate delivery services in both on-premises and cloud setups. In 2021, DevOps teams will use IA to automate the delivery, configuration, and management of IT infrastructure at scale and with more reliability.

IA tools offer many benefits to DevOps teams:

- Multi-cloud and hybrid cloud infrastructure orchestration.

- Support for immutable and programmable infrastructure.

- Self-service, on-demand environment creation.

- Efficient resource provisioning.

- Ease of experimentation.

We will see more integrations of IA tools with other pipeline components in the future. Teams will gain agility by applying CI/CD concepts to IT infrastructure.

Learn the difference between continuous integration, deployment, and delivery, three practices that allow DevOps teams to work with speed and precision.

What to expect in 2021: Companies will start replacing custom setups with enterprise-level IA tools. By automating the deployment and configuration of software with IA tools, organizations will gain:

- Faster deployments.

- Repeatable, consistent infrastructure.

- Cost reductions due to fewer manual tasks.

- Easier compliance thanks to consistent settings across all physical and virtual infrastructure.

Expect to see an increase in continuous configuration automation (CCA) tools too. These tools provide the ability to manage and deliver configuration changes as code. The scope of CCA tools will continue to expand into networking, containers, compliance, and security.
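To make "configuration changes as code" more concrete, here is a minimal sketch of the desired-state idea that CCA tools generally build on: the configuration is declared as data, compared with what is actually in place, and only the differences are applied. The function names (`plan_changes`, `apply_changes`) and the configuration keys are hypothetical and do not come from any specific tool.

```python
from typing import Any, Dict, List, Tuple

Step = Tuple[str, str, Any]


def plan_changes(desired: Dict[str, Any], actual: Dict[str, Any]) -> List[Step]:
    """Return the (action, key, value) steps that turn `actual` into `desired`."""
    steps: List[Step] = []
    for key in sorted(desired):
        if key not in actual:
            steps.append(("add", key, desired[key]))
        elif actual[key] != desired[key]:
            steps.append(("update", key, desired[key]))
    # Anything present but not declared is drift and gets removed.
    for key in sorted(actual):
        if key not in desired:
            steps.append(("remove", key, None))
    return steps


def apply_changes(actual: Dict[str, Any], steps: List[Step]) -> Dict[str, Any]:
    """Apply the planned steps to a copy of `actual` and return the new state."""
    result = dict(actual)
    for action, key, value in steps:
        if action == "remove":
            result.pop(key, None)
        else:
            result[key] = value
    return result


# Hypothetical settings, kept in version control alongside application code.
desired = {"nginx.worker_processes": 4, "sshd.permit_root_login": "no"}
actual = {"nginx.worker_processes": 2, "telnet.enabled": True}

steps = plan_changes(desired, actual)
converged = apply_changes(actual, steps)
```

Running the plan a second time against `converged` yields no steps, which is the repeatability property that makes configuration as code attractive.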

Learn how bare metal cloud servers help automate the infrastructure provisioning process.

2. The Use of Application Release Orchestration (ARO) Tools

ARO tools combine pipeline and environment management with release orchestration. These tools offer the following benefits:

- More agility: The team delivers new applications, changes, and bug fixes more quickly and reliably.

- Better productivity: Fewer manual tasks allow staff to focus on high-value work.

- Higher visibility: Bottlenecks and wait-states become visible during provisioning.

ARO tools will further improve the quality and velocity of releases. Companies will scale release activities across multiple teams, methods, DevOps pipelines, processes, and tools.

What to expect in 2021: ARO tools will become significantly more common. Faster delivery of new code will allow organizations to respond to changing market demands quickly.

3. More Complex Toolchains

A DevOps toolchain is a set of tools that support pipeline activity. A well-designed toolchain enables team members to:

- Work together with common objectives.

- Precisely measure metrics.

- Have fast feedback on all code changes.

DevOps toolchains are becoming more complex and broader. CI tooling is evolving with new systems that make it easy to create and maintain a build script. Pipelines are gaining new security features. Tools supporting package management and containers are also progressing rapidly.

Organizations have to ensure optimal toolchain usage by avoiding overlaps, conflicts, and functionality gaps.
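A simple audit can surface overlaps and gaps before they cause trouble. The sketch below assumes a hand-maintained mapping of tools to the pipeline stages they cover; the tool names and the stage list are placeholders, not recommendations.

```python
from collections import defaultdict

# Hypothetical list of stages the pipeline is expected to cover.
REQUIRED_STAGES = ["source control", "build", "test", "security scan", "deploy", "monitor"]

# Hypothetical inventory: which tool is used for which stage.
toolchain = {
    "tool-a": ["source control"],
    "tool-b": ["build", "test"],
    "tool-c": ["build", "deploy"],
    "tool-d": ["monitor"],
}


def audit_toolchain(toolchain, required_stages):
    """Return (overlaps, gaps) for a tool-to-stages mapping."""
    tools_by_stage = defaultdict(list)
    for tool, stages in toolchain.items():
        for stage in stages:
            tools_by_stage[stage].append(tool)
    # A stage served by more than one tool is a potential overlap or conflict.
    overlaps = {stage: sorted(tools) for stage, tools in tools_by_stage.items() if len(tools) > 1}
    # A required stage served by no tool is a functionality gap.
    gaps = [stage for stage in required_stages if stage not in tools_by_stage]
    return overlaps, gaps


overlaps, gaps = audit_toolchain(toolchain, REQUIRED_STAGES)
```

With this inventory, `overlaps` flags the two tools that both handle builds, and `gaps` flags the missing security scan stage.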

What to expect in 2021: Toolchain vendors will begin offering broader solutions across the development and delivery cycle. Companies will have more than one toolchain to support different stacks and delivery platforms (COTS, cloud, mainframe, container-native, etc.).

4. The Rise of DevSecOps

Cloud-native security will become more critical as organizations embrace Kubernetes, serverless, and other cloud-based technologies. Teams need new tools and processes to protect assets, which is why we predict a wide adoption of DevSecOps in the coming year.

DevSecOps is the integration of security and compliance testing into development pipelines. DevSecOps should:

- Seamlessly integrate into the life cycle.

- Provide transparent results to relevant stakeholders.

- Not reduce the agility of developers.

- Not require teams to leave their development environment.

- Provide security protection at runtime.

DevSecOps is becoming more programmable, so expect to see higher levels of automation in the following year.

Read about DevOps security best practices and ensure your team is operating safely and reliably.

What to expect in 2021: Security will no longer be an afterthought in DevOps pipelines. DevSecOps offerings will integrate with standard CI/CD testing tools at a higher rate. As a result, companies will see improvements in terms of cybersecurity, compliance, rules and protocol enforcement, and overall IT effectiveness.
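One common way to integrate security testing into a pipeline is a gate that fails the build when a scan reports findings at or above a chosen severity. The sketch below assumes scan results have already been parsed into simple records; the `Finding` type, the severity scale, and the identifiers are illustrative and not tied to any particular scanner.

```python
from dataclasses import dataclass

# Assumed severity scale; real scanners define their own.
SEVERITY_RANK = {"low": 1, "medium": 2, "high": 3, "critical": 4}


@dataclass(frozen=True)
class Finding:
    identifier: str
    severity: str


def security_gate(findings, fail_at="high"):
    """Return (passed, blocking): the gate passes only if nothing reaches `fail_at`."""
    threshold = SEVERITY_RANK[fail_at]
    blocking = [f for f in findings if SEVERITY_RANK[f.severity] >= threshold]
    return len(blocking) == 0, blocking


findings = [Finding("FINDING-1", "low"), Finding("FINDING-2", "critical")]
passed, blocking = security_gate(findings)
```

Returning the blocking findings along with the verdict keeps the result transparent to developers, who can see why a build stopped without leaving their usual workflow.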

5. Application Performance Monitoring (APM) Software

APM plays a crucial role in providing rapid feedback to developers during deployments. APM software includes:

- Front-end monitoring (observes the performance and behavior of user interactions).

- Application discovery, tracing, and diagnostics (ADTD analyzes the relations between web and application servers, microservices, and infrastructure).

- AIOps-powered analytics (detects patterns, anomalies, and causality across the life cycle).

In 2021, APM will be crucial to shortening MTTR (Mean Time to Repair), preserving service availability, and improving user experience. Advanced APM capabilities will help DevOps teams:

- Better understand business processes.

- Deliver insights into business operations.

- Isolate and prioritize problems.
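MTTR is straightforward to track once incidents carry a detection time and a resolution time. The sketch below uses made-up incident timestamps and assumes repair time is measured from detection to resolution; teams define those boundaries differently.

```python
from datetime import datetime, timedelta


def mean_time_to_repair(incidents):
    """Average of (resolved - detected) over a list of (detected, resolved) pairs."""
    durations = [resolved - detected for detected, resolved in incidents]
    if not durations:
        raise ValueError("at least one incident is required")
    return sum(durations, timedelta()) / len(durations)


# Hypothetical incident log: (detected, resolved).
incidents = [
    (datetime(2021, 1, 4, 9, 0), datetime(2021, 1, 4, 9, 30)),      # 30 minutes
    (datetime(2021, 1, 12, 14, 0), datetime(2021, 1, 12, 15, 30)),  # 90 minutes
    (datetime(2021, 2, 1, 22, 15), datetime(2021, 2, 1, 23, 15)),   # 60 minutes
]

mttr = mean_time_to_repair(incidents)  # timedelta of 1 hour for this sample
```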

What to expect in 2021: APM vendors will further expand their offerings to include integrated infrastructure monitoring and analysis (including networks, servers, databases, logs, containers, microservices, and cloud services).

Vendors will also continue to use machine learning (ML) in APM to:

- Reduce system noise.

- Predict and detect anomalies.

- Determine causality.
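As a rough illustration of anomaly detection, the sketch below flags samples that deviate sharply from a rolling baseline. It is a plain statistical z-score check rather than a machine learning model, and the window size, threshold, and latency values are arbitrary assumptions; commercial APM products use considerably more sophisticated techniques.

```python
from statistics import mean, stdev


def find_anomalies(samples, window=5, threshold=3.0):
    """Return indexes of samples far outside the preceding `window` samples."""
    anomalies = []
    for i in range(window, len(samples)):
        baseline = samples[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: treat any deviation as anomalous.
            if samples[i] != mu:
                anomalies.append(i)
            continue
        if abs(samples[i] - mu) / sigma > threshold:
            anomalies.append(i)
    return anomalies


# Hypothetical response times in milliseconds with one obvious spike.
latencies_ms = [100, 102, 98, 101, 99, 100, 103, 450, 101, 100]
spikes = find_anomalies(latencies_ms)  # only index 7 (the 450 ms sample) is flagged
```

Small fluctuations such as the 103 ms sample stay below the threshold, which is the noise reduction effect in miniature.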

The rising emphasis on customer experience will drive APM software to provide insight into the customer journey. Organizations will begin relying more on APM software to protect and understand their applications.

6. A Wider Scope of Cloud Management Platforms (CMPs)

Cloud management platforms (CMPs) help teams manage public, private, and multi-cloud services and resources. CMP functionality can come from a single product or from a set of vendor offerings.

In 2021, organizations will start to use CMPs to reduce operational costs and ensure adequate service levels. CMPs will offer many functionalities to businesses:

- Provisioning and orchestration.

- Service request management.

- Inventory and classification.

- Cloud monitoring and analytics.

- Resource optimization.

- Cloud migration, backup, and disaster recovery.

- Enforcing policy and compliance requirements.

The ability of a CMP to serve both developer and I&O (Infrastructure and Operations) staff will be essential in 2021. A CMP must:

- Link into the development process without hurting agility.

- Allow I&O teams to enforce provisioning standards easily.
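Provisioning standards are easiest to enforce when they are expressed as automated checks that run on every request, so developers get immediate feedback and I&O does not need to review requests by hand. The sketch below shows the idea with invented rules (allowed regions, a CPU cap, required tags); it does not reflect any particular CMP's policy format.

```python
# Hypothetical provisioning standards set by the I&O team.
ALLOWED_REGIONS = {"eu-west", "us-east"}
MAX_CPUS = 16
REQUIRED_TAGS = {"owner", "cost-center"}


def check_request(request):
    """Return a list of policy violations; an empty list means the request is compliant."""
    violations = []
    if request.get("region") not in ALLOWED_REGIONS:
        violations.append("region not allowed")
    if request.get("cpus", 0) > MAX_CPUS:
        violations.append("too many CPUs")
    missing = REQUIRED_TAGS - set(request.get("tags", {}))
    if missing:
        violations.append("missing tags: " + ", ".join(sorted(missing)))
    return violations


compliant = {"region": "eu-west", "cpus": 4, "tags": {"owner": "team-a", "cost-center": "1234"}}
non_compliant = {"region": "ap-south", "cpus": 64, "tags": {"owner": "team-b"}}

print(check_request(compliant))
print(check_request(non_compliant))
```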

What to expect in 2021: Companies will get a better understanding of where CMP tooling can and cannot offer results. Enterprises will deploy CMPs to increase the agility of their DevOps team.

Read about the five different cloud deployment models and find the one that fits your business needs.

7. More Uncertain Goals and Requirements

Bimodal IT operations enable I&O teams to support users by analyzing the certainty of their requirements. Bimodal IT relies on two work style modes:

- Mode 1: The team knows the requirements and expects them to lead to predictable IT services or products.

- Mode 2: Requirements are uncertain and still being explored, so results are hard to predict.

There will be an increase in business opportunities that embrace Mode 2. These strategies involve high levels of uncertainty, both in business and IT terms. Companies will prioritize agility and mean time to value for project and product teams to pursue new strategies and improve user experience.

What to expect in 2021: I&O teams will have to learn new skills to increase agility and improve business outcomes. Changes to current processes are also likely as Mode 2 opportunities require a more streamlined approach.

8. Further Growth of AgileOps

AgileOps is a set of proven agile and DevOps methods that I&O uses to improve agility. AgileOps techniques help streamline both software development and tasks in other business fields:

- To support development, I&O team members should learn DevOps and agile practices.

- For use cases not involving development, team members should know the concepts of Kanban, Gemba Kaizen, and extensive automation.

- Learning scrum, lean processes, and continuous improvement will help I&O improve product management techniques.

What to expect in 2021: The rising need to respond to user requirements quickly will drive the growth of AgileOps. I&O team members will use agile, lean, and DevOps concepts to gain more agility in fields not involving application development.

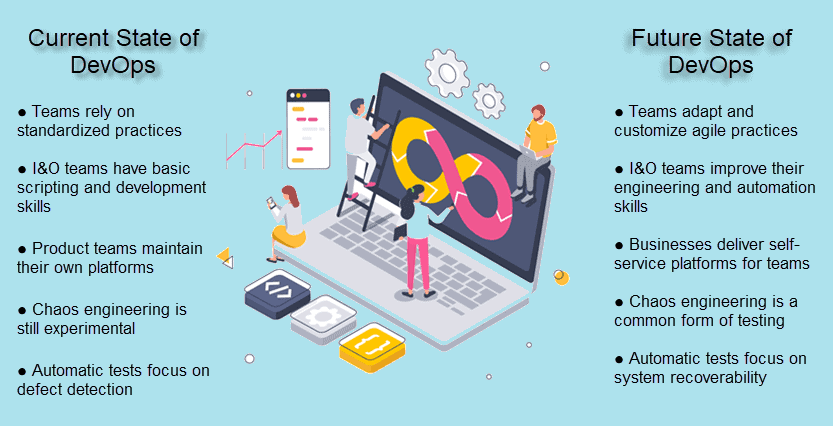

The Future of DevOps in 2021 (and Beyond)

Template-Based Practices Become a Constraint

Successful DevOps requires teams to self-organize and adjust their processes to specific product needs. DevOps teams will start to evolve standardized methods and frameworks into customized ways of working.

By 2023, 75% of companies will adjust agile practices to match the product and team contexts. As a result, application delivery cadence will rise. We will also see a rise in emerging techniques that emphasize practices over methods, such as Essence and Disciplined Agile.

Main effects:

- Assignments to a specific product (or a group of related products) will last longer.

- Familiarity with the product will increase team effectiveness.

- Continuous learning and adaptation will become even more significant to agile and DevOps.

- Teams will start describing ways of working through practice-oriented techniques.

Team recommendations:

- Set guidelines but allow the team to choose its practices and ways of working.

- Ensure the team understands how agile development works before customizing procedures.

- Organize workshops to share knowledge among colleagues.

- Experiment with practice-oriented techniques to record methodologies.

I&O Teams Will Become More Agile

The adoption of cloud-native architecture and programmable infrastructure will require I&O to become more agile. I&O will have to expand their development skills beyond basic scripting.

Reliability engineering requires I&O teams to interface with development and product teams more effectively. Addressing reliability challenges requires a solid understanding of system design and operations.

By 2023, 60% of I&O leaders will improve their development skills to support business innovation. I&O teams will become more adept at:

- System architecture.

- Artificial intelligence for IT operations (AIOps).

- Application development.

- Testing automation.

Main effects:

- Software engineering skills will enable I&O to drive business innovation.

- I&O will collaborate with development teams more than before.

- I&O will take advantage of new skills to drive efficiency and reduce technical debt.

Team recommendations:

- Build your I&O capability over time. Map your development needs and make a long-term plan on how to meet them.

- Find a balance between hiring new talent and in-house staff training.

- Pay attention to employee retention, as the demand for engineering skills in I&O will exceed the supply.

Self-Service Platforms for Product Teams

Typically, product teams that maintain their own infrastructure lack the time or expertise to optimize platform use. These teams must divert precious resources from user-focused innovation to platform maintenance, upgrades, and management.

By 2023, 70% of companies will deliver shared, self-service platforms for product teams. These platforms will improve application deployment frequency by 25%. Other benefits will include:

- Fewer toolchain overlaps.

- Consistent standards of governance and security.

- Higher customer satisfaction.

- Greater business agility.

Internal platforms will be more responsive and less constraining to product teams.

Main effects:

- Businesses will respond faster to threats and opportunities.

- I&O team members will start to treat platforms as products that continually improve as business needs change.

- Companies will reduce overlap and redundancy, enable economies of scale, and establish high standards of governance.

Team recommendations:

- Build dedicated platform teams that will grant further agility to product teams.

- Organize communities of practice to ensure platforms meet all consumer requirements.

Chaos Engineering Becomes a Regular Testing Technique

By 2023, 40% of DevOps teams will be using chaos engineering as a standard part of their test suite. As a result, we will see a 20% reduction in unplanned downtime.

Chaos engineering relies on fault injection to proactively find errors and bugs typically invisible to other testing strategies. Chaos experiments are ideal for complex IT systems with many moving parts.
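The sketch below illustrates fault injection on a very small scale: a wrapper randomly raises errors in place of a dependency call, and an experiment measures how often the calling code still succeeds thanks to its retry logic. All names (`FaultInjector`, `fetch_price`, `run_experiment`) are hypothetical, and real chaos experiments target running systems rather than in-process functions.

```python
import random


class FaultInjector:
    """Wraps calls and raises an injected error with the given probability."""

    def __init__(self, failure_rate, seed=None):
        self.failure_rate = failure_rate
        self._rng = random.Random(seed)  # seeded so experiments are repeatable

    def call(self, func, *args):
        if self._rng.random() < self.failure_rate:
            raise ConnectionError("injected fault")
        return func(*args)


def fetch_price(item):
    """Stand-in for a call to a downstream dependency."""
    return {"widget": 9.99}[item]


def fetch_with_retry(injector, item, attempts=3):
    """The resilience mechanism under test: retry, then give up with None."""
    for _ in range(attempts):
        try:
            return injector.call(fetch_price, item)
        except ConnectionError:
            continue
    return None


def run_experiment(failure_rate, requests=1000, seed=42):
    """Return the fraction of requests that succeed under the injected failure rate."""
    injector = FaultInjector(failure_rate, seed)
    succeeded = sum(
        fetch_with_retry(injector, "widget") is not None for _ in range(requests)
    )
    return succeeded / requests


print(run_experiment(0.3))
```

Comparing the measured success rate against an expected level gives the experiment a clear hypothesis to confirm or refute, and fixing the seed makes the run reusable across teams.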

Read more about chaos engineering and learn how unpredictable tests build up system resilience.

Main effects:

- Chaos experiments in preproduction will become a standard part of the continuous delivery process.

- Large enterprises will begin using chaos engineering to scale at a quicker pace.

Team recommendations:

- Create a community of practice to build chaos engineering awareness and skills.

- Train with open-source chaos engineering tools.

- Create reusable experiments to help different teams scale the method and build confidence with familiar tests.

Quick Failure Recoveries

To consistently deliver value to customers, applications must always be up and running. Failure recovery will be a big DevOps area of improvement in the coming years.

By 2023, 60% of organizations will be testing for system recoverability as a part of CI/CD pipelines.

Our guide to CI/CD explains how automated release pipelines benefit both DevOps teams and organizations.

Main effects:

- Recovery testing will become a standard part of the test automation process.

- QA will focus more on defect remediation.

- Product teams will become more aware of the current levels of system resilience and reliability.

Team recommendations:

- Automate the entire process of incident handling as if the defect occurs in production.

- Ensure all incidents with failed system recovery go through root cause analysis.

- Extend QA mechanisms to include periodic validation and verification of system recoverability.
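A recoverability check in a pipeline can be as simple as forcing a failure and asserting that the service becomes healthy again within a budget. The sketch below uses a fake in-memory service and counts health checks instead of wall-clock time so that it runs instantly; `FakeService` and the budget values are assumptions for illustration only.

```python
class FakeService:
    """Hypothetical stand-in for a deployed service.

    After a crash it reports unhealthy for `checks_to_recover` health checks.
    """

    def __init__(self, checks_to_recover):
        self.checks_to_recover = checks_to_recover
        self._remaining = 0

    def crash(self):
        self._remaining = self.checks_to_recover

    def is_healthy(self):
        if self._remaining > 0:
            self._remaining -= 1
            return False
        return True


def checks_until_recovered(service, max_checks):
    """Crash the service, then poll; return the check number at which it recovered."""
    service.crash()
    for attempt in range(1, max_checks + 1):
        if service.is_healthy():
            return attempt
    return None  # did not recover within the budget


def recovery_test(service, budget):
    """Pipeline step: pass only if the service recovers within `budget` checks."""
    return checks_until_recovered(service, budget) is not None


print(recovery_test(FakeService(checks_to_recover=3), budget=5))   # recovers in time
print(recovery_test(FakeService(checks_to_recover=10), budget=5))  # exceeds the budget
```

A failed recovery test is the kind of incident the recommendations above would route to root cause analysis.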

Check out our article Infrastructure in the Age of DevOps to stay up-to-date with the emerging trends and the benefits of adopting DevOps.

Adopt Early and Stay Ahead of the Competition

Companies that adopt these DevOps trends will improve their ability to design, build, deploy, and maintain quality software. Embracing these trends on time will also allow companies to remain competitive in another intense year for DevOps.